ADVISOR: Frédéric Devernay

TEL: 04 76 61 52 58

EMAIL: frederic.devernay at inria.fr

TEAM AND LAB: PRIMA team, Inria Rhône-Alpes & LIG

THEMES: Image, Vision

KEYWORDS: Computer vision, Panoramic imaging, Immersive media, Video processing

DURATION: 36 months

Above: Examples of panoramic video cameras by Immersive Media (Dodeca 2360), PtGrey (Ladybug) and Totavision

Panoramic stitching of photographs taken with a standard camera has been around for more than a decade, and many commercial and free stitching tools now implement state-of-the-art stitching methods [1,4] (for example Autopano, Panorama Tools [11], Hugin, or Autodesk Stitcher). The process consists of taking a set of photographs from a single point of view, together covering a very large field of view, and assembling them into a photographic panorama.

Panoramic video is live-action video covering a very large field of view, typically a hemisphere, a cylinder, or a full sphere. Sample applications include movies for planetariums or Omnimax (Totavision) and interactive movies (Kolor, Loop'in). The first real-life experiment in panoramic video was conducted at the 1900 Paris Exposition by Raoul Grimoin-Sanson: the movie was shot by 10 cameras suspended from a hot-air balloon and projected onto ten 9×9 m screens covering a 360° cylinder. Disney later reintroduced this process in 1955 as "Circle-Vision 360°" for its theme parks, but the seams between the screens, and between the camera fields of view, were clearly visible because of the filming and display setup. The only way to avoid visible seams is to have overlapping fields of view and to apply stitching techniques to produce the final panoramic video, which was not possible before photographic stitching techniques appeared. Panoramic video can also be captured with a single camera equipped with either a fish-eye lens or a curved mirror. However, the resulting resolution is limited by that of a single camera, and the image quality is not homogeneous because of geometric distortions and aberrations, which disqualifies these systems for producing high-resolution immersive video.

For panoramic video, all images covering the panorama must be captured simultaneously (although some approaches use non-simultaneous images [16,17,18]), so the hardware usually consists of several high-resolution cameras [13,14,15,24], and each frame of the resulting video must be stitched from all the videos. The most notable commercial systems are the Dodeca 2360 by Immersive Media, the Ladybug by Point Grey [25], the Totavision panoramic camera, and the Omnicam by Fraunhofer HHI. The PANOPTIC camera at EPFL [26] combines a hundred low-resolution CMOS sensors to build a high-resolution panoramic video, but its design is still highly experimental. Low-resolution panoramic videos can also be acquired with a single camera and a catadioptric system [12,14], but the resulting image quality is not suitable for immersive video presentation, and such systems are usually restricted to navigation or computer vision tasks.

Static stereoscopic 3D panoramas can be produced using a rotating camera with two vertical slits (or a pair of single-slit cameras) [19,12]. A slit camera is easily simulated by keeping only one column of pixels from each frame of a standard camera (or two separate columns to simulate a dual-slit camera). The resulting panorama can be viewed either on a cylindrical display with 3D glasses, or on any planar 3D screen, using an interface such as a mouse or joystick to rotate the panorama.
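
A minimal sketch of the slit-camera simulation, assuming the video is available as a NumPy array of frames captured while the camera rotates about a vertical axis at constant angular speed. The function names `slit_panorama` and `dual_slit_panoramas` are hypothetical, and no correction for uneven rotation or lens distortion is attempted.

```python
import numpy as np


def slit_panorama(frames: np.ndarray, column: int) -> np.ndarray:
    """Stack one pixel column from every frame of a rotating camera.

    frames: array of shape (T, H, W) or (T, H, W, C). Assumes constant
    angular speed, so frame t simply becomes panorama column t.
    Returns an array of shape (H, T) or (H, T, C).
    """
    # frames[:, :, column] has shape (T, H[, C]); swap the first two axes
    # so that time (i.e. rotation angle) runs along the panorama width.
    return np.swapaxes(frames[:, :, column], 0, 1)


def dual_slit_panoramas(frames: np.ndarray, offset: int):
    """Simulate a dual-slit camera with two columns symmetric about the centre."""
    centre = frames.shape[2] // 2
    first = slit_panorama(frames, centre - offset)
    second = slit_panorama(frames, centre + offset)
    # Which of the two panoramas is shown to which eye depends on the
    # rotation direction; this sketch does not decide it.
    return first, second
```

Each output column corresponds to one rotation angle, so the panorama width equals the number of frames.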

Stereoscopic video panoramas are much harder to capture. Couture et al. [20] use a pair of rotating full-frame cameras to construct an omnistereo video, taking inspiration from panoramic video methods that use a single video camera to avoid visible seams [16,17,18]. However, this method cannot capture all directions simultaneously, so it only applies to repetitive motion and static camera positions. Weissig et al. [21] upgraded the Fraunhofer HHI Omnicam to produce live stereoscopic video panoramas, but the video panorama stitching problem becomes even more difficult in that setup and remains unsolved. The US company JauntVR produces stereoscopic 3D panoramic videos using a camera setup in which each point is seen by at least 3 cameras, but building the 3D panoramic video still requires a lot of manual editing.

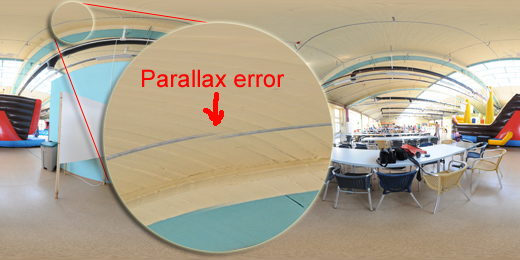

Image stitching consists of several phases: image alignment, image warping, and blending. For static images, image alignment usually consists of computing 2D transforms relating the various images, and warping simply consists of applying these transforms to the source images. The blending phase is usually considered trickier, and most methods focus on avoiding visible artifacts at this stage, such as color variations, double images due to moving or misaligned objects, unnatural edges, or cut objects. Most artifacts in panoramas are due either to images that were not taken simultaneously (which does not occur in panoramic video) or to residual parallax, because the cameras' optical centers are not at exactly the same position in 3D. Unfortunately, the latter does occur in panoramic video stitching, since several cameras with overlapping fields of view cannot physically be placed at the same point.
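
Under the pure-rotation model assumed by most photographic stitchers, the 2D transform relating two images is the homography H = K2 R K1^-1. The sketch below computes it and applies it by inverse warping with nearest-neighbour sampling. The pinhole intrinsics (square pixels, zero skew) and all helper names are assumptions for illustration; real tools also model lens distortion and use better interpolation.

```python
import numpy as np


def intrinsics(f, cx, cy):
    """Pinhole intrinsics with square pixels and zero skew (assumption)."""
    return np.array([[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]])


def rotation_y(theta):
    """Rotation by theta radians about the vertical (y) axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rotation_homography(K1, K2, R):
    """Image-1 to image-2 transform for cameras sharing one optical centre,
    with camera-2 coordinates X2 = R @ X1."""
    return K2 @ R @ np.linalg.inv(K1)


def apply_homography(H, pts):
    """Map an (N, 2) array of pixel coordinates through H."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def warp_nearest(src, H, out_shape):
    """Inverse warping: output pixel p takes the value of src at H^-1 p
    (nearest neighbour). Pixels that map outside src, or whose homogeneous
    coordinate is not positive, are set to 0."""
    h, w = out_shape
    ys, xs = np.mgrid[0:h, 0:w]
    pts = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)], axis=1)
    homog = pts @ np.linalg.inv(H).T
    z = homog[:, 2]
    front = z > 1e-12
    sx = np.full(h * w, -1)
    sy = np.full(h * w, -1)
    sx[front] = np.rint(homog[front, 0] / z[front]).astype(int)
    sy[front] = np.rint(homog[front, 1] / z[front]).astype(int)
    valid = (sx >= 0) & (sx < src.shape[1]) & (sy >= 0) & (sy < src.shape[0])
    out = np.zeros(h * w, dtype=src.dtype)
    out[valid] = src[sy[valid], sx[valid]]
    return out.reshape(h, w)
```

As a check, the principal point of camera 1 lands at cx + f·tan(theta) in camera 2 after a yaw of theta.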

Traditional photographic panorama blending techniques include feathering or alpha blending [1,25], optimal seam [2], Laplacian pyramid blending [3,3b] or its simplification, two-band blending [4], and gradient domain blending [5,6]. Feathering and Laplacian pyramid blending are linear operations, so when the input images change slowly (these changes may be temporal in the case of video), the output also changes slowly; however, misalignments show up in the blending areas as ghosts, double images, or artificial discontinuities (see figure below). In contrast, optimal seam [6b] and gradient domain blending [6] are the result of an optimization, and the optimum may jump abruptly from one local minimum to another even if the images change slowly. Methods in this second category should thus either be avoided for panoramic video blending, since they would cause flickering or jitter in the overlapping areas, or be adapted to produce a temporally continuous output. However, even with a method from the first category, a detail may slowly disappear from one place and reappear at another, so parallax effects must be dealt with separately.
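
The continuity argument can be checked on a toy one-dimensional overlap. In the sketch below, `feather` is a linear cross-fade and `cut_at_best_seam` is a deliberately simplified stand-in for optimal-seam methods [2,6b]: it switches images at the single pixel where they differ least. Both names and the input values are hypothetical.

```python
import numpy as np


def feather(a, b):
    """Linear cross-fade across the overlap: the weight of b ramps from 0 to 1."""
    w = np.linspace(0.0, 1.0, len(a))
    return (1.0 - w) * a + w * b


def cut_at_best_seam(a, b):
    """Toy 1D 'optimal seam': take a before the pixel where |a - b| is
    smallest and b from that pixel on."""
    s = int(np.argmin(np.abs(a - b)))
    return np.concatenate([a[:s], b[s:]])


a = np.zeros(6)
b_t0 = np.array([3.0, 1.0, 2.0, 2.0, 1.001, 3.0])  # overlap at frame t
b_t1 = b_t0.copy()
b_t1[1] += 0.002                                    # frame t+1: tiny change

input_change = np.max(np.abs(b_t1 - b_t0))
feather_change = np.max(np.abs(feather(a, b_t1) - feather(a, b_t0)))
seam_change = np.max(np.abs(cut_at_best_seam(a, b_t1) - cut_at_best_seam(a, b_t0)))
print(input_change, feather_change, seam_change)
```

Here a 0.002 change in one input pixel moves the cut from index 1 to index 4 and changes the seam output by 2.0, while the feathered output changes by only 0.0004.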

Several methods have been proposed to compensate for the geometric effects of parallax in static panoramas [7,8,9,10], but none of them addresses parallax compensation in video panoramas: the temporal consistency of the resulting panorama sequences is not taken into account in the stitching process, so temporal artifacts, which are very distracting to the human eye, may appear randomly in the resulting sequences.

Within this project, we propose to compensate for the effects of parallax before the blending stage, and then to apply a specific blending method that minimizes temporal artifacts. Using the stereo disparity or the optical flow between cameras in the overlap regions, we will apply view-interpolation techniques, combined with ideas borrowed from Laplacian pyramid blending, to produce panoramic videos that exhibit neither temporal nor spatial artifacts.
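
For reference, a compact sketch of Laplacian pyramid blending [3b] on grayscale NumPy arrays. To keep it short, it replaces the Gaussian filter of Burt and Adelson with 2×2 box averaging and nearest-neighbour upsampling, and it requires image sides divisible by 2**levels; these simplifications belong to the sketch, not to the original method. Every step is linear in the input images, which is why this family of methods changes smoothly when the inputs do.

```python
import numpy as np


def down(img):
    """Halve resolution by averaging 2x2 blocks (box filter stand-in
    for the Gaussian kernel of the original method)."""
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def up(img):
    """Double resolution by pixel replication."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)


def gaussian_pyramid(img, levels):
    pyr = [img]
    for _ in range(levels):
        pyr.append(down(pyr[-1]))
    return pyr


def laplacian_pyramid(img, levels):
    g = gaussian_pyramid(img, levels)
    # Band-pass detail levels, followed by the coarsest low-pass residual.
    return [g[i] - up(g[i + 1]) for i in range(levels)] + [g[-1]]


def collapse(lap):
    """Invert laplacian_pyramid: upsample and add back each detail level."""
    out = lap[-1]
    for band in reversed(lap[:-1]):
        out = up(out) + band
    return out


def pyramid_blend(a, b, mask, levels):
    """Blend each frequency band of a and b with a progressively smoother
    version of mask (1 selects a, 0 selects b)."""
    la, lb = laplacian_pyramid(a, levels), laplacian_pyramid(b, levels)
    gm = gaussian_pyramid(mask, levels)
    return collapse([m * x + (1.0 - m) * y for m, x, y in zip(gm, la, lb)])
```

Low frequencies are thus mixed over a wide transition zone and high frequencies over a narrow one, which hides exposure differences without blurring fine detail.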

Camera calibration has to be fully 3D, as opposed to the usual 2D calibration, which assumes identical optical centers and a pure rotation between cameras. This 3D calibration will make it possible (a) to compute an optimal parallax-free geometric transform for a scene at a given distance, which will be applied in non-overlapping areas so that the main subject of the video shows no parallax and thus no distortion; and (b) to know a priori the amount (in pixels) and the direction of the displacement caused by parallax for a given depth range.
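
In standard two-view geometry, point (a) corresponds to the homography induced by a plane at the chosen distance, and point (b) to the residual displacement of points off that plane. The sketch below assumes pinhole cameras related by X2 = R X1 + t, a plane n·X1 = d expressed in camera-1 coordinates, and, for the parallax bound, a fronto-parallel plane with a baseline perpendicular to the optical axis. Function names are hypothetical.

```python
import numpy as np


def plane_homography(K1, K2, R, t, n, d):
    """Homography induced by the plane n . X1 = d for cameras related by
    X2 = R X1 + t: H = K2 (R + t n^T / d) K1^-1. Points on that plane
    are mapped with no parallax."""
    return K2 @ (R + np.outer(t, n) / d) @ np.linalg.inv(K1)


def project(K, X):
    """Project a 3D point given in camera coordinates to pixel coordinates."""
    x = K @ X
    return x[:2] / x[2]


def max_parallax_px(f, baseline, z_ref, z_min, z_max=np.inf):
    """Worst-case residual displacement (pixels) for depths in [z_min, z_max]
    after aligning on the fronto-parallel plane z = z_ref:
    f * baseline * |1/z - 1/z_ref|. The expression is monotonic in 1/z,
    so the worst case is reached at one end of the depth range."""
    inv_depths = [1.0 / z_min, 0.0 if np.isinf(z_max) else 1.0 / z_max]
    return f * baseline * max(abs(v - 1.0 / z_ref) for v in inv_depths)
```

For instance, with illustrative values f = 1000 pixels, a 5 cm baseline, and a reference plane at 5 m, `max_parallax_px(1000.0, 0.05, 5.0, 1.0)` gives 40 pixels for objects as close as 1 m; the displacement is along the baseline direction.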

The parallax effects will be compensated for by a novel method proposed within this project, called "geometric feathering". This method is inspired by recent work on spatial and/or temporal image interpolation [22,23], and computes a per-pixel transform to apply to each image in the overlapping zones. Additionally, if the fields of view of the cameras overlap sufficiently, this parallax will also be used to produce a 3D panorama, which can be viewed on immersive visualization devices such as the Oculus Rift, the Samsung Gear VR, or the Google Cardboard.
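
Geometric feathering is still to be designed within the project, so the following is only a hypothetical one-dimensional illustration of the underlying idea, using plain flow-based view interpolation: each image is warped towards the intermediate viewpoint given by the local cross-fade weight before blending. It assumes a known, constant disparity `d` across the overlap (in practice it would come from stereo or optical flow and vary per pixel) and resamples with linear interpolation.

```python
import numpy as np


def sample(signal, positions):
    """Linear interpolation of a 1D signal at fractional pixel positions."""
    return np.interp(positions, np.arange(len(signal), dtype=float), signal)


def geometric_feather(a, b, disparity, w):
    """A feature at x in a appears at x + disparity in b. Both images are
    warped towards the intermediate viewpoint w (0 = a, 1 = b) before the
    usual cross-fade, so the two copies of each feature coincide."""
    x = np.arange(len(a), dtype=float)
    a_warped = sample(a, x - w * disparity)
    b_warped = sample(b, x + (1.0 - w) * disparity)
    return (1.0 - w) * a_warped + w * b_warped


def count_peaks(signal, threshold=0.3):
    """Count local maxima above threshold."""
    s = signal
    return int(np.sum((s[1:-1] > s[:-2]) & (s[1:-1] >= s[2:]) & (s[1:-1] > threshold)))


n, d = 200, 20.0
x = np.arange(n, dtype=float)
a = np.exp(-0.5 * ((x - 80.0) / 2.0) ** 2)      # feature seen at x = 80 in a
b = np.exp(-0.5 * ((x - 80.0 - d) / 2.0) ** 2)  # same feature at x = 100 in b
w = x / (n - 1)                                 # cross-fade weight across the overlap

plain = (1.0 - w) * a + w * b
geometric = geometric_feather(a, b, d, w)
print(count_peaks(plain), count_peaks(geometric))
```

With plain feathering the feature appears twice (a double image), whereas after the per-pixel warp it appears once, at a position between its two original locations.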

The algorithms that process each frame of the panoramic video will be designed for GPU implementation.

Above: the effect of parallax error on a stitched panoramic image.

Please send a motivation letter and a resumé (CV) by email to frederic.devernay at inria.fr with the subject line "[PhD] Super high resolution panoramic video processing".

[1] Richard Szeliski, Image Alignment and Stitching: A Tutorial, Microsoft Research Technical Report MSR-TR-2004-92, October 2004. http://research.microsoft.com/pubs/70092/tr-2004-92.pdf

[2] Efros, A., Freeman, W.: Image quilting for texture synthesis and transfer. Proceedings of SIGGRAPH 2001 (2001) 341-346. DOI=10.1145/383259.383296

[3] E. H. Adelson, C. H. Anderson, J. R. Bergen, P. J. Burt, J. M. Ogden: Pyramid Methods in Image Processing, Radio Corporation of America, RCA Engineer, 29(6):33-41 http://persci.mit.edu/pub_pdfs/RCA84.pdf

[3b] Peter J. Burt and Edward H. Adelson. 1983. A multiresolution spline with application to image mosaics. ACM Trans. Graph. 2, 4 (October 1983), 217-236. DOI=10.1145/245.247

[4] M. Brown and D. G. Lowe. 2003. Recognising Panoramas. In Proceedings of the Ninth IEEE International Conference on Computer Vision - Volume 2 (ICCV '03), Vol. 2. IEEE Computer Society, Washington, DC, USA, 1218-. DOI=10.1109/ICCV.2003.1238630 http://www.cs.ubc.ca/~lowe/papers/brown03.pdf

[5] Patrick Perez, Michel Gangnet and Andrew Blake, Poisson Image Editing, Proc. SIGGRAPH, 2003. DOI=10.1145/1201775.882269

[6] A. Levin, A. Zomet, S. Peleg and Y. Weiss. Seamless Image Stitching in the Gradient Domain. Proc. of the European Conference on Computer Vision (ECCV), Prague, May 2004. http://www.wisdom.weizmann.ac.il/~levina/papers/eccv04-blending.pdf

[6b] Brian Summa, Julien Tierny, and Valerio Pascucci. 2012. Panorama weaving: fast and flexible seam processing. ACM Trans. Graph. 31, 4, Article 83 (July 2012), 11 pages. DOI=10.1145/2185520.2185579

[7] Heung-Yeung Shum and Richard Szeliski, "Construction and refinement of panoramic mosaics with global and local alignment," Sixth International Conference on Computer Vision, pp. 953-956, 4-7 Jan 1998. http://research.microsoft.com/pubs/75611/ShumSzeliski-ICCV99.pdf

[8] Kang S. B., Szeliski R., Uyttendaele M.: Seamless Stitching Using Multi-Perspective Plane Sweep. Microsoft Research Technical Report MSR-TR-2004-48, June 2004. ftp://ftp.research.microsoft.com/pub/tr/TR-2004-48.pdf

[9] Michael Adam, Christoph Jung, Stefan Roth, and Guido Brunnett. Real-time stereo-image stitching using GPU-based belief propagation. In Proc. of the Workshop on Vision, Modeling and Visualization (VMV), Braunschweig, Germany, Nov. 2009 http://www.gris.tu-darmstadt.de/%7Esroth/pubs/vmv2009.pdf

[10] Alan Brunton, Chang Shu, "Belief Propagation for Panorama Generation," Third International Symposium on 3D Data Processing, Visualization, and Transmission (3DPVT'06), pp. 885-892, 2006. DOI=10.1109/3DPVT.2006.36 http://nparc.cisti-icist.nrc-cnrc.gc.ca/npsi/ctrl?action=rtdoc&an=5765151

[11] Helmut Dersch, Panorama Tools, Open source software for Immersive Imaging, International VR Photography Conference 2007 in Berkeley, Keynote Address, http://webuser.fh-furtwangen.de/%7Edersch/IVRPA.pdf

[12] Peter Sturm, Srikumar Ramalingam, Jean-Philippe Tardif, Simone Gasparini and João Barreto (2011) "Camera Models and Fundamental Concepts Used in Geometric Computer Vision", Foundations and Trends® in Computer Graphics and Vision: Vol. 6: No 1-2, pp 1-183. DOI=10.1561/0600000023.

[13] Aditi Majumder, W. Brent Seales, M. Gopi, and Henry Fuchs. 1999. Immersive teleconferencing: a new algorithm to generate seamless panoramic video imagery. In Proceedings of the seventh ACM international conference on Multimedia (Part 1) (MULTIMEDIA '99). ACM, New York, NY, USA, 169-178. DOI=10.1145/319463.319485

[14] Simon Baker and Shree K. Nayar. 1999. A Theory of Single-Viewpoint Catadioptric Image Formation. Int. J. Comput. Vision 35, 2 (November 1999), 175-196. DOI=10.1023/A:1008128724364

[15] Kar-Han Tan, Hong Hua, and N. Ahuja. 2004. Multiview Panoramic Cameras Using Mirror Pyramids. IEEE Trans. Pattern Anal. Mach. Intell. 26, 7 (July 2004), 941-946. DOI=10.1109/TPAMI.2004.33

[16] Alex Rav-Acha, Yael Pritch, Dani Lischinski, and Shmuel Peleg. 2005. Dynamosaics: Video Mosaics with Non-Chronological Time. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR '05), Vol. 1. IEEE Computer Society, Washington, DC, USA, 58-65. DOI=10.1109/CVPR.2005.137

[17] Aseem Agarwala, Ke Colin Zheng, Chris Pal, Maneesh Agrawala, Michael Cohen, Brian Curless, David Salesin, and Richard Szeliski. 2005. Panoramic video textures. ACM Trans. Graph. 24, 3 (July 2005), 821-827. DOI=10.1145/1073204.1073268

[18] M. El-Saban, M. Izz, A. Kaheel, Fast stitching of videos captured from freely moving devices by exploiting temporal redundancy, 17th IEEE International Conference on Image Processing (ICIP), 2010, 1193-1196. DOI=10.1109/ICIP.2010.5651811

[19] Shmuel Peleg, Moshe Ben-Ezra, and Yael Pritch. 2001. Omnistereo: Panoramic Stereo Imaging. IEEE Trans. Pattern Anal. Mach. Intell. 23, 3 (March 2001), 279-290. DOI=10.1109/34.910880

[20] Vincent Couture, Michael S. Langer, and Sebastien Roy. 2011. Panoramic stereo video textures. In Proceedings of the 2011 International Conference on Computer Vision (ICCV '11). IEEE Computer Society, Washington, DC, USA, 1251-1258. DOI=10.1109/ICCV.2011.6126376

[21] Christian Weissig, Oliver Schreer, Peter Eisert, and Peter Kauff. 2012. The ultimate immersive experience: panoramic 3D video acquisition. In Proceedings of the 18th international conference on Advances in Multimedia Modeling (MMM'12), Klaus Schoeffmann, Bernard Merialdo, Alexander G. Hauptmann, Chong-Wah Ngo, and Yiannis Andreopoulos (Eds.). Springer-Verlag, Berlin, Heidelberg, 671-681. DOI=10.1007/978-3-642-27355-1_72

[22] Miquel Farre, Oliver Wang, Manuel Lang, Nikolce Stefanoski, Alexander Hornung, and Aljoscha Smolic. 2011. Automatic content creation for multiview autostereoscopic displays using image domain warping. In Proceedings of the 2011 IEEE International Conference on Multimedia and Expo (ICME '11). IEEE Computer Society, Washington, DC, USA, 1-6. DOI=10.1109/ICME.2011.6012182

[23] Frédéric Devernay and Adrian Ramos Peon. 2010. Novel view synthesis for stereoscopic cinema: detecting and removing artifacts. In Proceedings of the 1st international workshop on 3D video processing (3DVP '10). ACM, New York, NY, USA, 25-30. DOI=10.1145/1877791.1877798

[24] J. Foote and D. Kimber, FlyCam: Practical Panoramic Video and Automatic Camera Control, in Proceedings of IEEE International Conference on Multimedia and Expo (III). 2000, 1419-1422. DOI=10.1109/ICME.2000.871033 http://www.fxpal.com/publications/FXPAL-PR-00-090.pdf

[25] Point Grey, Overview of the Ladybug Image Stitching Process, Technical Application Note TAN2008010, Revised January 25, 2013 http://www.ptgrey.com/support/downloads/documents/TAN2008010_Overview_Ladybug_Image_Stitching.pdf

[26] Hossein Afshari, Laurent Jacques, Luigi Bagnato, Alexandre Schmid, Pierre Vandergheynst, Yusuf Leblebici, The PANOPTIC Camera: A Plenoptic Sensor with Real-Time Omnidirectional Capability, Journal of Signal Processing Systems (2013) 70:305-328 DOI=10.1007/s11265-012-0668-4

[27] Seon Joo Kim and Marc Pollefeys. 2008. Robust Radiometric Calibration and Vignetting Correction. IEEE Trans. Pattern Anal. Mach. Intell. 30, 4 (April 2008), 562-576. DOI=10.1109/TPAMI.2007.70732

[28] Jean-François Lalonde, Srinivasa G. Narasimhan, and Alexei A. Efros. 2010. What Do the Sun and the Sky Tell Us About the Camera?. Int. J. Comput. Vision 88, 1 (May 2010), 24-51. DOI=10.1007/s11263-009-0291-4

[29] Daniel B. Goldman. 2010. Vignette and Exposure Calibration and Compensation. IEEE Trans. Pattern Anal. Mach. Intell. 32, 12 (December 2010), 2276-2288. DOI=10.1109/TPAMI.2010.55